AutoRAG Part1: QA set

- AutoRAG 준비물 중 하나인 QA data set을 만드는 것은 중요한 첫 단추

- Single Question and Answer

- Corpus의 context를 보고 LLM이 Question 과 Answer를 생성한다

- https://docs.auto-rag.com/data_creation/tutorial.html#make-qa-data-from-corpus-data

Start creating your own evaluation data - AutoRAG documentation

Previous Configure LLM & Embedding models

docs.auto-rag.com

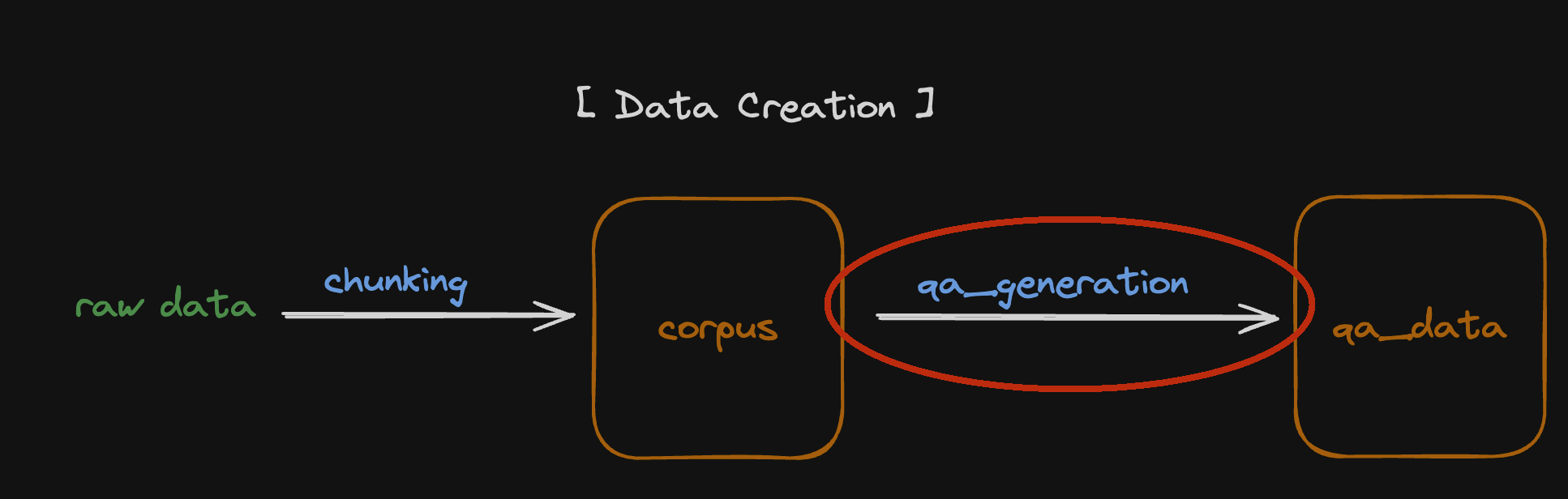

RawData 1)→ corpus 2)→ QA set

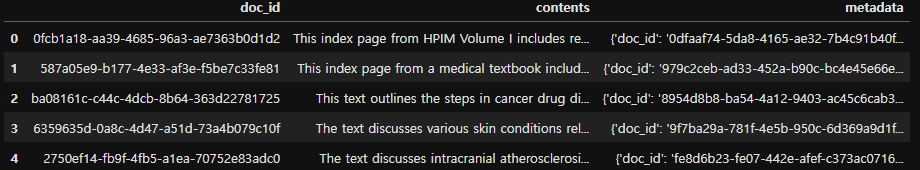

1. corpus

- Docs(Raw Data) 에서 chunking 기술등을 통해 context를 만드는 과정

- corpus의 예시

- llamaindex

from llama_index.core import SimpleDirectoryReader

from llama_index.core.node_parser import TokenTextSplitter

from autorag.data.corpus import llama_text_node_to_parquet

documents = SimpleDirectoryReader('your_dir_path').load_data()

nodes = TokenTextSplitter().get_nodes_from_documents(documents=documents, chunk_size=512, chunk_overlap=128)

corpus_df = llama_text_node_to_parquet(nodes, 'path/to/corpus.parquet')

- langchain

from langchain_community.document_loaders import DirectoryLoader

from langchain_text_splitters import RecursiveCharacterTextSplitter

from autorag.data.corpus import langchain_documents_to_parquet

documents = DirectoryLoader('your_dir_path', glob='**/*.md').load_data()

documents = RecursiveCharacterTextSplitter(chunk_size=512, chunk_overlap=128).split_documents(documents)

corpus_df = langchain_documents_to_parquet(documents, 'path/to/corpus.parquet')

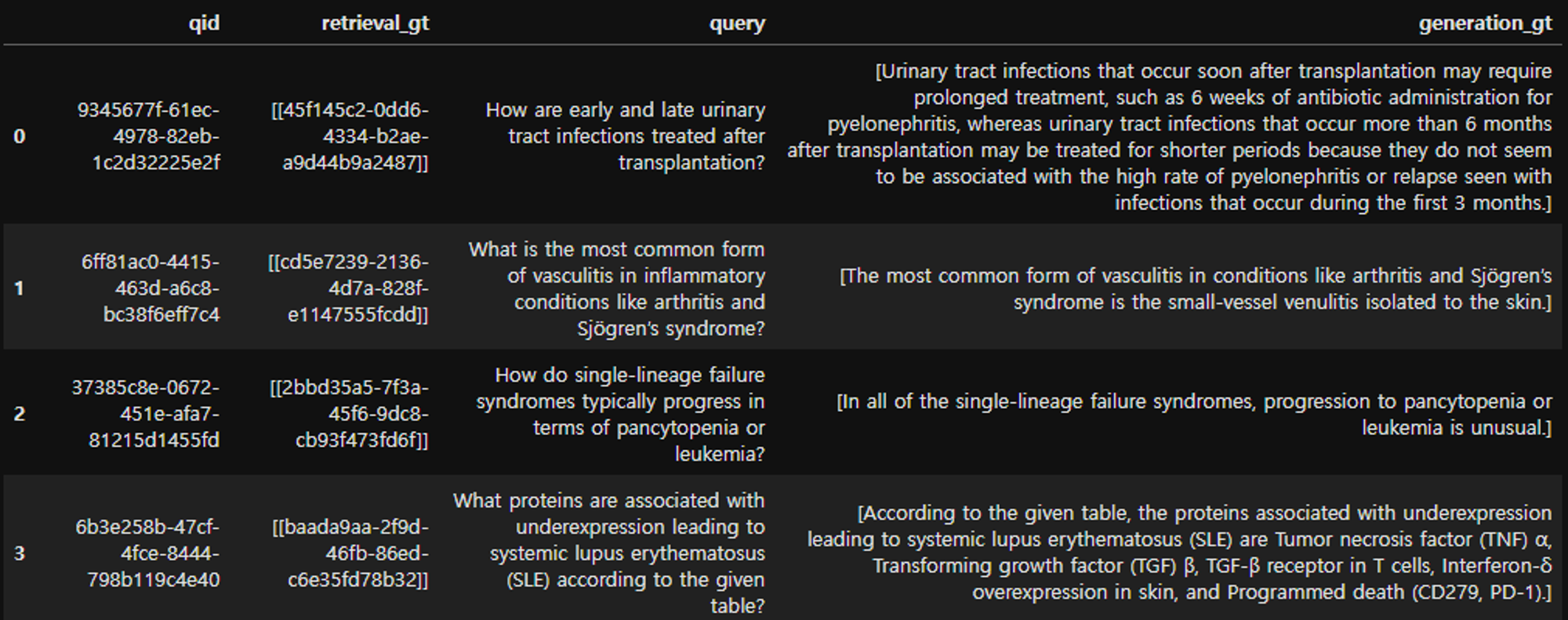

2. QA set

- corpus 데이터에서 하나의 content를 LLM이 보고 Question과 Answer를 생성하여 QA set생성

- single contet, single-hop, single-document QA data로도 불림

3. QA set method

- QA set을 만드는 것은 AutoRAG를 함에 있어서 매우 중요함

- 쉽게 두 가지 hyperparameter가 있음

- LLM & temperature

- custom prompt

- 기본 제공 코드

import pandas as pd

from llama_index.llms.openai import OpenAI

from autorag.data.qacreation import generate_qa_llama_index, make_single_content_qa

corpus_df = pd.read_parquet('path/to/corpus.parquet')

llm = OpenAI(model='gpt-3.5-turbo', temperature=1.0)

qa_df = make_single_content_qa(corpus_df, 50, generate_qa_llama_index, llm=llm, question_num_per_content=1,

output_filepath='path/to/qa.parquet', cache_batch=64)

- Custom prompt

import pandas as pd

from llama_index.llms.openai import OpenAI

from autorag.data.qacreation import generate_qa_llama_index, make_single_content_qa

prompt = """

Generate question and answer pairs for the given passage.

Passage:

{{text}}

Number of questions to generate: {{num_questions}}

Example:

[Q]: What is this?

[A]: This is a sample question.

Result:

"""

corpus_df = pd.read_parquet('path/to/corpus.parquet')

llm = OpenAI(model='gpt-3.5-turbo', temperature=1.0)

qa_df = make_single_content_qa(corpus_df, content_size=50, qa_creation_func=generate_qa_llama_index,

llm=llm, prompt=prompt, question_num_per_content=1)Prompt 만들기

- GPTs Prompt maker 이용

- https://chatgpt.com/g/g-hhh4w3eov-prompt-maker

ChatGPT - Prompt Maker

from a simple prompt → to an optimized prompt

chatgpt.com

- Query

harrison's principles of internal medicine 책 내용을 기반으로 RAG시스템을 만들고 평가하기 위한 single content question answer dataset을 만드는 prompt 를 작성해줘

아래의 예시를 참고해서 영어로 작성해줘 ---예시 prompt_sample = """다음은 걸그룹 뉴진스에 관한 기사입니다. 기사를 보고 할 만한 질문을 만드세요. 반드시 뉴진스와 관련한 질문이어야 합니다. 만약 주어진 기사 내용이 뉴진스와 관련되지 않았다면, '뉴진스와 관련 없습니다.'라고 질문을 만드세요.

기사: {{text}}

생성할 질문 개수: {{num_questions}}

예시: [Q]: 뉴진스는 몇 명인가요? [A]: 뉴진스는 총 다섯 명입니다.

뉴진스와 관련이 없는 기사일 경우 예시: [Q]: 뉴진스와 관련 없습니다. [A]: 뉴진스와 관련 없습니다.

결과: """

- Results

prompt = """Act like an experienced medical education specialist. You have been developing medical education content and assessments for over 15 years. You specialize in creating detailed and accurate question-answer datasets based on complex medical texts.

Your task is to create a single content question-answer dataset based on the given text from Harrison's Principles of Internal Medicine. Follow the instructions precisely to ensure the dataset is accurate and comprehensive.

Objective: To generate detailed and accurate question-answer pairs from the provided text that can be used to build and evaluate a Retrieval-Augmented Generation (RAG) system.

Instructions:

Read the provided passage from Harrison's Principles of Internal Medicine carefully. Generate a set of questions based on the content of the passage. Each question must be directly related to the information provided in the text. Ensure the questions cover a range of information including definitions, explanations, mechanisms, diagnostic criteria, treatment options, and any other relevant details mentioned in the text. For each question, provide a detailed answer derived strictly from the provided text. Do not include information that is not present in the text. Generate exactly {{num_questions}} questions based on the provided text. If the passage does not contain sufficient information to generate the specified number of questions, note this clearly and state, "Insufficient information to generate the required number of questions." Format each question-answer pair as follows: [Q]: [Your question] [A]: [Your detailed answer] Ensure clarity, precision, and completeness in both the questions and answers. Here is an example format based on a hypothetical medical text:

Example: Text: "Diabetes mellitus is a chronic condition characterized by high levels of sugar (glucose) in the blood. It is caused by either insufficient insulin production or the body's cells becoming resistant to insulin. Common symptoms include increased thirst, frequent urination, and unexplained weight loss. Management includes lifestyle changes, oral medications, and insulin therapy."

Generated Questions and Answers: [Q]: What is diabetes mellitus? [A]: Diabetes mellitus is a chronic condition characterized by high levels of sugar (glucose) in the blood. It is caused by either insufficient insulin production or the body's cells becoming resistant to insulin.

[Q]: What are common symptoms of diabetes mellitus? [A]: Common symptoms of diabetes mellitus include increased thirst, frequent urination, and unexplained weight loss.

[Q]: How is diabetes mellitus managed? [A]: Management of diabetes mellitus includes lifestyle changes, oral medications, and insulin therapy.

If the passage provided does not contain sufficient information to generate the required number of questions, note: [Q]: Insufficient information to generate the required number of questions. [A]: Insufficient information to generate the required number of questions.

Now, follow these steps with the provided passage from Harrison's Principles of Internal Medicine.

Text: {{text}}

Number of questions to generate: {{num_questions}}

Take a deep breath and work on this problem step-by-step.

"""

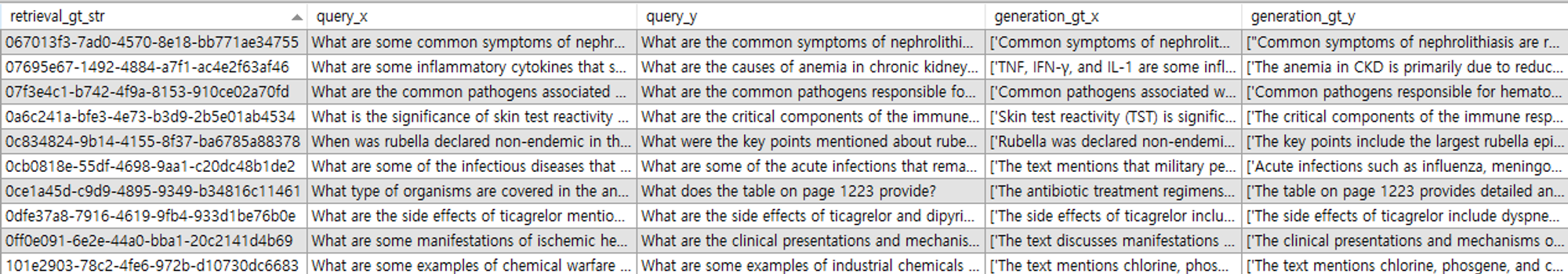

- 기본 prompt vs custom prompt 비교

# make qa set

qa_df11 = make_single_content_qa(corpus_df, 200, generate_qa_llama_index, llm=llm2, question_num_per_content=1, upsert=True, random_state=42,

output_filepath='../data/qa_prompt1.parquet')

qa_df22 = make_single_content_qa(corpus_df, 200, generate_qa_llama_index, llm=llm2, question_num_per_content=1, upsert=True, random_state=42,

output_filepath='../data/qa_prompt2.parquet', prompt=prompt)

qa_df11['retrieval_gt_str'] = qa_df11['retrieval_gt'].apply(lambda x: x[0][0] if isinstance(x, list) and len(x) > 0 else None)

qa_df22['retrieval_gt_str'] = qa_df22['retrieval_gt'].apply(lambda x: x[0][0] if isinstance(x, list) and len(x) > 0 else None)

# qa_df3['retrieval_gt_str'] = qa_df3['retrieval_gt'].apply(lambda x: x[0][0] if isinstance(x, list) and len(x) > 0 else None)

qa_full = pd.merge(qa_df1,qa_df2, on="retrieval_gt_str", how="inner")

# qa_full = pd.merge(qa_full,qa_df3, on="retrieval_gt_str", how="inner")

qa_full.drop_duplicates(subset='query_x',inplace=True)

qa_full[[

'retrieval_gt_str',

# 'query',

'query_x',

'query_y',

# 'generation_gt',

'generation_gt_x',

'generation_gt_y'

]].to_csv("../data/qa_prompt_test_240710.csv")

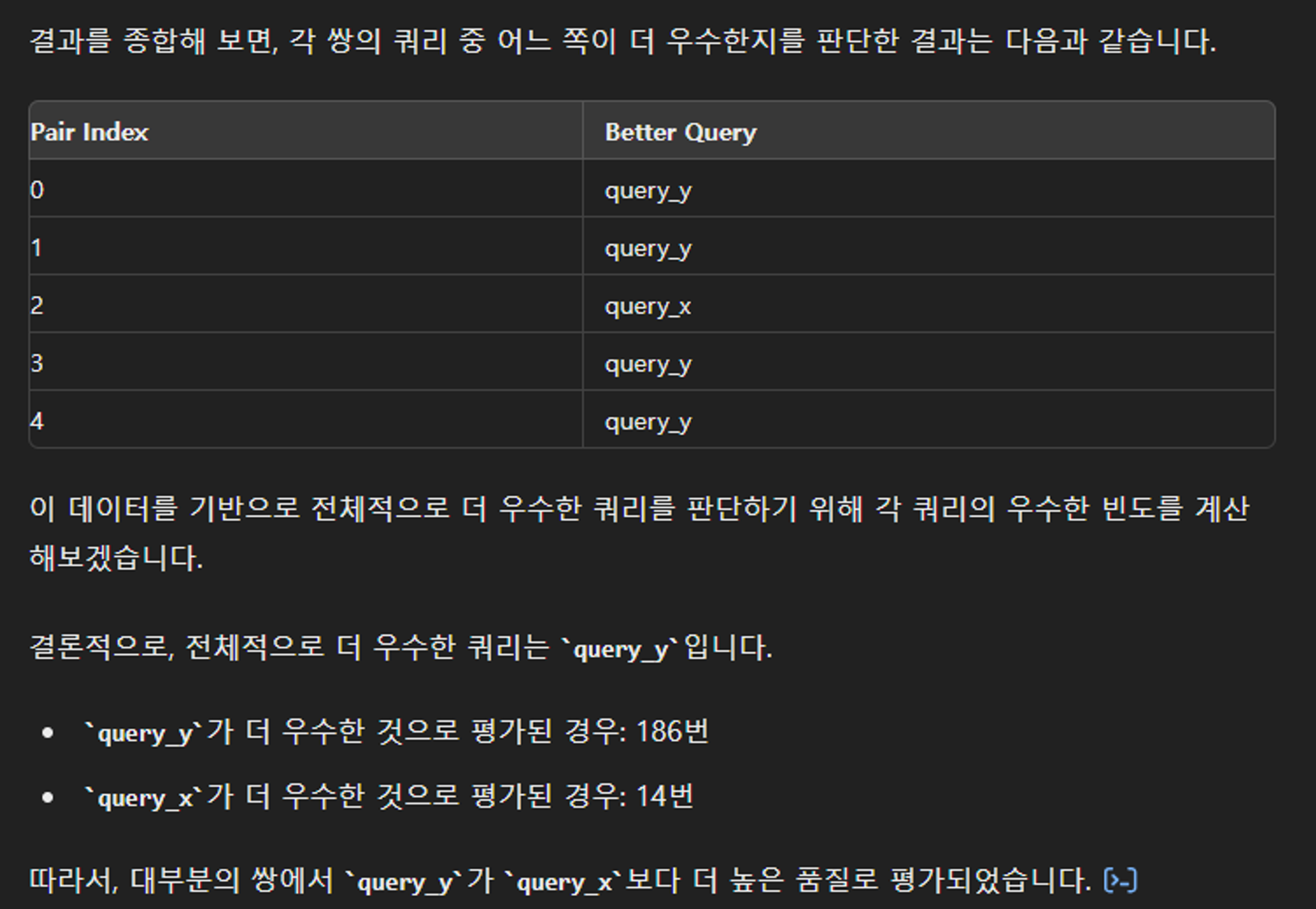

- qa_prompt_test.csv

- 질문 & 답변의 질 판단 → ChatGPT

- 첨부파일 = qa_prompt_test.csv

“첨부파일은 RAG 평가지표로 사용할 QA dataset에 관한 내용이다. query_x 와 query_y를 비교하여 더 우수한 품질의 질문을 선택하려고 할 때 적절한 지표들을 사용하여 두 질문들을 정량적으로 평가해서 더 우수한 질문이 query_x인지 query_y인지 판단해줘. 시각화도 함께 해서 보여줘.”